LLM Data Preprocessing Preprocessing text data is one of the most important steps in training Large Language Models (LLMs). The first step in this process is tokenization — the act of converting raw text into smaller, manageable units called tokens. 1. Tokenization Tokenization can be broadly categorized into three …

Read More

📘3. Positional Embeddings 🧠 Why Do We Need Positional Embeddings? In embedding layer, the same tokens get mapped to the same vector representation. That means the model naturally has no idea about token order. For example, consider these two sentences: ✅ Dog bites man ❌ Man bites dog Same words, totally different …

Read More

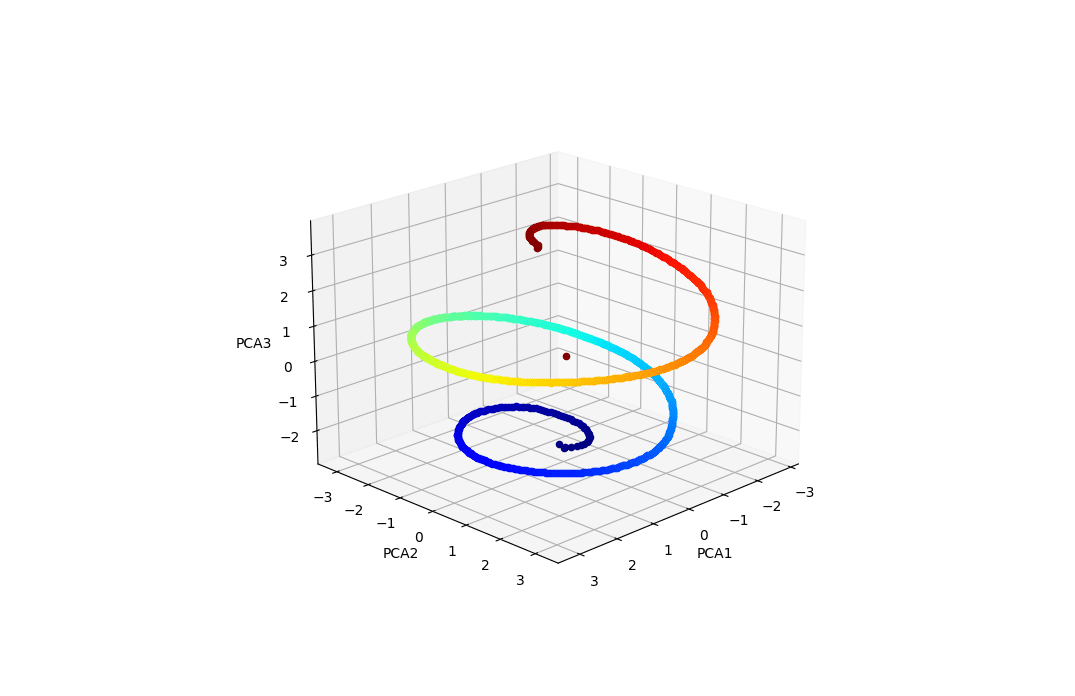

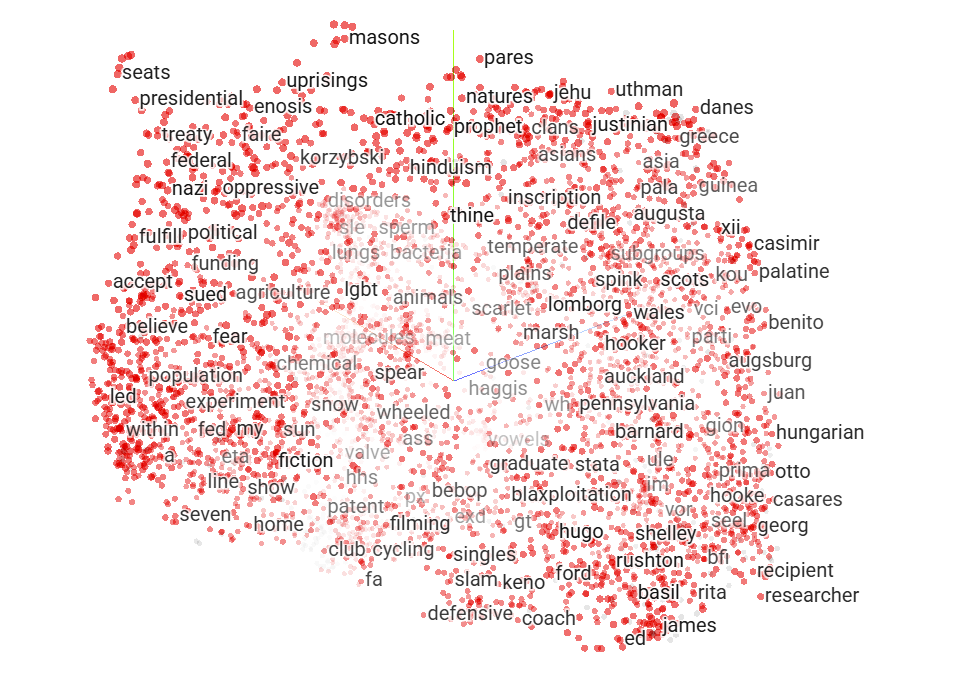

🧠2.Token Embeddings in LLMs They’re also often called vector embeddings or word embeddings. 🔤 From Tokens to Token Embeddings Before a model can “read” anything, it first breaks text into smaller chunks called tokens. Each token is then assigned a unique token ID, a simple integer from the vocabulary. For example: Text …

Read More